“Dogfooding AI generated end-to-end tests”

Join me for a talk on how we used an LLM-based AI agent to end-to-end test our own web application. I’ll kick off with the baseline assumptions we had when we started building our product: how far we thought we could get with state-of-the-art LLMs, and how we structured our early prototyping.

In the second half of the talk, I’ll share what we’ve learned from our own experiments and from more than six months of using the AI agent to test our apps. I’ll walk through examples of challenges the AI agent faced and how we are tackling them, focusing on real situations where the agent was effective and where it fell short. I’ll also present our dogfooding strategy, how we try to ensure we ship working code, and one example where we still failed.

Finally, I’d like to give a brief outlook on the future of AI-based software testing based on our experience, and discuss it with you.

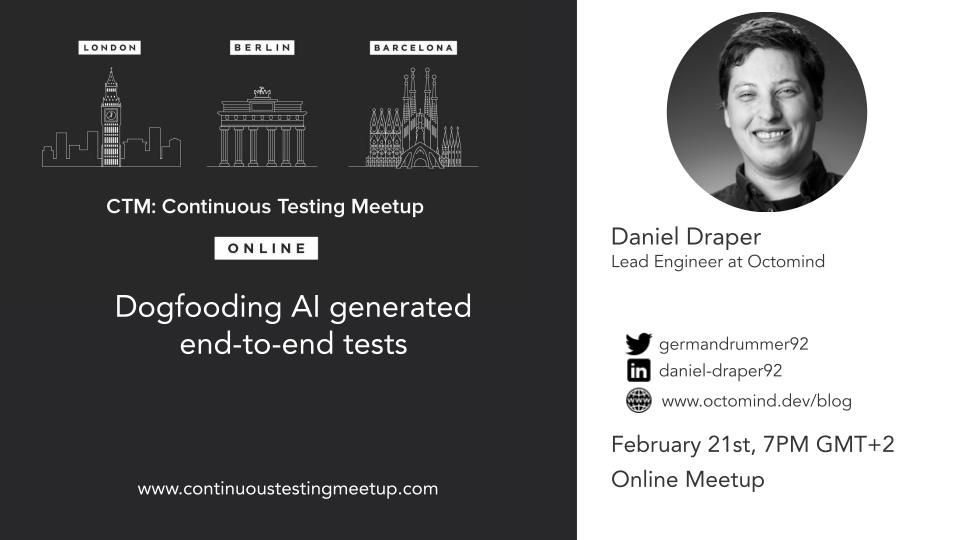

Bio

Daniel Draper is an experienced senior full-stack engineer with a soft spot for machine learning and deep learning, and for figuring out how to turn them into useful products. He’s especially passionate about clean code and believes in building software that not only works but is also well-crafted, well-tested, readable and maintainable. His relationship with software testing is best described in his blog. When he’s not building software, he’s often out hiking and traveling with his wife or playing the drums 🥁 in various orchestras in Karlsruhe, Germany.

Social networks

- GitHub
- Author at Octomind blog
- Discord